Transferring Large Files: A Comprehensive Guide to Efficient Data Movement

Efficient transfer of large files is essential in industries ranging from media and entertainment to scientific research and data analysis. As file sizes continue to grow, reliable and fast data movement becomes increasingly critical. This guide covers the best practices, technologies, and strategies for transferring large files efficiently and securely.

Understanding the Challenge of Large File Transfers

The transfer of large files presents unique challenges that require careful consideration and specialized solutions. Unlike smaller files, which can be easily emailed or shared via instant messaging platforms, large files demand more robust and sophisticated methods. Factors such as file size, network bandwidth, and transfer speed can significantly impact the efficiency and success of the transfer process.

One of the primary challenges is the potential for data corruption or loss during the transfer. As files increase in size, the risk of errors during transmission rises, leading to incomplete or damaged files. Additionally, network congestion and unreliable connections can further complicate the process, resulting in extended transfer times and potential delays.

Key Considerations for Efficient Large File Transfers

To ensure successful and efficient large file transfers, several critical factors must be taken into account. These considerations include:

- File Size and Complexity: Understanding the size and complexity of the files to be transferred is essential. Large files, such as high-resolution videos, 3D models, or large datasets, require specialized transfer methods to ensure data integrity and minimize transfer times.

- Network Infrastructure: The quality and capacity of the network infrastructure play a crucial role in determining the efficiency of large file transfers. Factors such as bandwidth, latency, and network congestion can significantly impact transfer speeds and overall performance.

- Security and Privacy: Transferring large files often involves sensitive or confidential data. Implementing robust security measures, such as encryption and access controls, is vital to protect the confidentiality and integrity of the data and to prevent unauthorized access during the transfer process.

- Reliability and Redundancy: Ensuring reliable and error-free transfers is essential. Employing techniques such as error correction, data verification, and redundancy can help mitigate the risks of data loss or corruption during the transfer.

- Scalability and Flexibility: As file sizes and transfer volumes grow, the chosen transfer solution must be scalable and flexible to accommodate future needs. The ability to handle increasing data volumes and support multiple transfer protocols is crucial for long-term efficiency.
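The data verification mentioned under Reliability and Redundancy is commonly done by comparing checksums computed on both sides of the transfer. The following is a minimal sketch using Python's standard `hashlib` module; the function names `sha256_of_file` and `transfer_is_intact` and the 1 MiB chunk size are illustrative assumptions, not part of any particular transfer tool.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in fixed-size chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so that multi-gigabyte files never have to fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def transfer_is_intact(source_path, received_path):
    """Compare digests of the original file and the received copy."""
    return sha256_of_file(source_path) == sha256_of_file(received_path)
```

In practice the sender would typically publish the digest alongside the file, and the receiver would compute its own digest after the transfer and compare the two strings.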

Technologies and Strategies for Efficient Large File Transfers

Several technologies and strategies can be employed to enhance the efficiency and reliability of large file transfers. These include:

Optimized Transfer Protocols

Choosing an appropriate transfer protocol, such as FTP (File Transfer Protocol), SFTP (SSH File Transfer Protocol), or HTTP/HTTPS, can significantly improve transfer reliability. These protocols support features that matter for large files, such as resuming an interrupted transfer from a byte offset. SFTP and HTTPS also encrypt data in transit, whereas plain FTP and HTTP do not.
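As one illustration of how a protocol can help with large files, HTTP supports range requests, which let a client ask only for the bytes it is still missing after an interrupted download. The sketch below builds the request header for that case. The helper name `resume_headers` is hypothetical, and it assumes the total size is already known (for example from an earlier response) and that the server honors range requests.

```python
import os


def resume_headers(partial_path, total_size):
    """Build the Range header needed to fetch only the bytes still missing.

    Returns None when the local file is already complete.
    """
    have = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    if have >= total_size:
        return None
    # "bytes=N-" asks the server for everything from offset N to the end.
    return {"Range": f"bytes={have}-"}
```

A client would send these headers with its GET request and append the response body to the partial file, but only if the server answers with status 206 (Partial Content). A 200 response means the server ignored the range and is sending the whole file again.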

Accelerated Transfer Technologies

Accelerated transfer technologies, such as Aspera FASP (Fast, Adaptive, and Secure Protocol), use UDP-based transport with their own rate and congestion control to sustain high throughput where TCP struggles. These technologies are particularly useful for transferring large files over long distances, where high latency and packet loss would otherwise keep a transfer from using the available bandwidth.

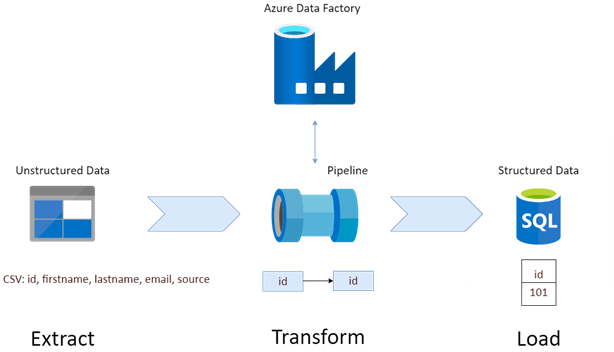

Cloud-Based File Transfer Services

Cloud-based file transfer services, such as AWS Transfer Family or Google Cloud Storage Transfer Service, offer scalable and secure solutions for large file transfers. These services provide robust security features, global availability, and seamless integration with other cloud-based applications.

Data Compression and Archiving

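Compressing large files before transfer can reduce their size, leading to faster transfer times and reduced network congestion. The gain depends on the data: text, logs, and raw datasets often compress well, while already-compressed formats such as most video and image files shrink very little. Lossless algorithms also trade ratio against speed: LZMA achieves high compression ratios at the cost of CPU time, whereas LZ4 favors speed over ratio. Neither compromises data integrity.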
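A minimal sketch of compressing a file before transfer, using the `lzma` module from Python's standard library (LZ4 is not in the standard library and would need a third-party package). The function names and the 1 MiB copy buffer are illustrative choices.

```python
import lzma
import shutil


def compress_file(src_path, dst_path, preset=6):
    """Write an .xz (LZMA) compressed copy of src_path to dst_path."""
    with open(src_path, "rb") as src, lzma.open(dst_path, "wb", preset=preset) as dst:
        # Stream in 1 MiB pieces so the whole file is never held in memory.
        shutil.copyfileobj(src, dst, 1024 * 1024)


def decompress_file(src_path, dst_path):
    """Restore the original bytes from an .xz file."""
    with lzma.open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        shutil.copyfileobj(src, dst, 1024 * 1024)
```

Higher `preset` values (up to 9) generally produce smaller output at the cost of more CPU time and memory, so it is worth checking whether compression time outweighs the transfer time saved on a given link.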

Parallel and Multi-Threaded Transfers

Implementing parallel or multi-threaded transfer techniques allows for the simultaneous transfer of multiple file segments, increasing overall transfer speed. By dividing large files into smaller chunks and transferring them concurrently, these techniques can significantly reduce transfer times.
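The chunking idea can be sketched as follows. To stay self-contained, this example copies byte ranges between two local files with a thread pool; in a real transfer each chunk would instead be a ranged download or one part of a multipart upload. All function names, the 8 MiB chunk size, and the worker count are assumptions for illustration.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def split_ranges(total_size, chunk_size):
    """Return (offset, length) pairs that cover total_size bytes exactly once."""
    return [
        (offset, min(chunk_size, total_size - offset))
        for offset in range(0, total_size, chunk_size)
    ]


def copy_chunk(src_path, dst_path, offset, length):
    """Copy one byte range; each worker opens its own file handles."""
    with open(src_path, "rb") as src, open(dst_path, "r+b") as dst:
        src.seek(offset)
        dst.seek(offset)
        dst.write(src.read(length))
    return length


def parallel_copy(src_path, dst_path, chunk_size=8 * 1024 * 1024, workers=4):
    """Copy src_path to dst_path in concurrent chunks; return bytes copied."""
    total = os.path.getsize(src_path)
    # Pre-allocate the destination so every worker can write at its own offset.
    with open(dst_path, "wb") as dst:
        dst.truncate(total)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(copy_chunk, src_path, dst_path, offset, length)
            for offset, length in split_ranges(total, chunk_size)
        ]
        # result() re-raises any worker exception, so failures are not silent.
        return sum(f.result() for f in futures)
```

Because each chunk is independent, a failed chunk can be retried on its own rather than restarting the whole file, which is one reason chunked transfers tend to be more robust as well as faster.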

Transfer Monitoring and Management

Utilizing transfer monitoring and management tools provides real-time visibility into the transfer process, allowing for proactive issue resolution and optimization. These tools offer features such as transfer progress tracking, error detection and reporting, and advanced analytics for performance optimization.
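Progress tracking of the kind described above comes down to a few running counters. The sketch below is a hypothetical `TransferProgress` helper, not the interface of any specific monitoring product; the injectable `clock` parameter is an assumption added so the class can be tested without waiting in real time.

```python
import time


class TransferProgress:
    """Track bytes moved and derive percentage, throughput, and a rough ETA."""

    def __init__(self, total_bytes, clock=time.monotonic):
        self.total_bytes = total_bytes
        self.done_bytes = 0
        self._clock = clock
        self._start = clock()

    def update(self, n_bytes):
        """Record that n_bytes more have been transferred."""
        self.done_bytes += n_bytes

    def snapshot(self):
        """Return current percent complete, average rate, and estimated time left."""
        elapsed = self._clock() - self._start
        rate = self.done_bytes / elapsed if elapsed > 0 else 0.0
        remaining = self.total_bytes - self.done_bytes
        eta = remaining / rate if rate > 0 else None
        percent = 100.0 * self.done_bytes / self.total_bytes if self.total_bytes else 100.0
        return {"percent": percent, "bytes_per_second": rate, "eta_seconds": eta}
```

A transfer loop would call `update(len(chunk))` after each chunk is sent and log or display `snapshot()` periodically. The ETA here uses the average rate since the start, so it reacts slowly to sudden changes in network conditions.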

Performance Analysis and Optimization

To ensure optimal performance and efficiency, regular performance analysis and optimization of large file transfer processes are essential. This involves:

- Network Performance Monitoring: Continuously monitoring network performance, including bandwidth utilization, latency, and packet loss, helps identify potential bottlenecks and optimize transfer speeds.

- Transfer Speed Testing: Conducting regular transfer speed tests provides valuable insights into the actual transfer speeds achieved, allowing for comparison against expected performance and identification of any performance degradation.

- Error Analysis and Mitigation: Analyzing errors and failures during large file transfers is crucial for identifying and resolving underlying issues. This includes investigating network errors, file corruption, or transfer protocol inefficiencies.

- Continuous Optimization: Based on performance analysis and feedback, continuous optimization of transfer processes is necessary. This may involve adjusting transfer protocols, fine-tuning network settings, or implementing new technologies to improve overall efficiency.
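Comparing measured speeds against expected performance, as described under Transfer Speed Testing, is simple arithmetic. The sketch below shows one way to do it. The `efficiency` factor of 0.8 and the 25% `tolerance` are arbitrary assumptions that should be calibrated to the actual environment, and the function names are illustrative.

```python
def expected_transfer_seconds(size_bytes, link_mbps, efficiency=0.8):
    """Estimate transfer time for a file on a link rated at link_mbps.

    efficiency is an assumed allowance for protocol overhead and sharing.
    """
    usable_bits_per_second = link_mbps * 1_000_000 * efficiency
    return size_bytes * 8 / usable_bits_per_second


def measured_mbps(size_bytes, elapsed_seconds):
    """Convert an observed transfer into megabits per second."""
    return size_bytes * 8 / elapsed_seconds / 1_000_000


def is_degraded(measured, expected, tolerance=0.25):
    """Flag throughput that falls more than `tolerance` below the expected value."""
    return measured < expected * (1 - tolerance)
```

For example, a 10 GB file on a 1 Gbps link takes at least 80 seconds even with no overhead at all, which gives a floor against which measured transfer times can be judged.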

Future Implications and Emerging Technologies

As technology continues to advance, several emerging trends and technologies are poised to revolutionize large file transfers. These include:

- 5G and Beyond: The rollout of 5G networks and future generations of mobile connectivity promise significantly higher bandwidth and lower latency, enabling faster and more reliable large file transfers over wireless networks.

- Edge Computing and Content Delivery Networks (CDNs): Edge computing and CDNs bring computing resources and content closer to end-users, reducing network latency and improving transfer speeds. These technologies are particularly beneficial for distributing large files to multiple locations simultaneously.

- Blockchain and Decentralized File Storage: Blockchain technology and decentralized file storage solutions offer enhanced security, data integrity, and redundancy for large file transfers. By distributing data across multiple nodes, these technologies provide resilience against data loss and unauthorized access.

- Artificial Intelligence (AI) and Machine Learning: AI and machine learning algorithms can optimize large file transfers by predicting network congestion, identifying optimal transfer routes, and adapting to changing network conditions in real-time.

Conclusion

Efficiently transferring large files is a critical aspect of modern data management and collaboration. By understanding the challenges and implementing the right technologies and strategies, organizations can ensure reliable, secure, and rapid data movement. As technology continues to evolve, staying informed about emerging trends and adopting innovative solutions will be key to maintaining a competitive edge in the digital landscape.

What are the best practices for transferring large files securely?

To ensure secure large file transfers, consider using encrypted transfer protocols such as SFTP or HTTPS. Additionally, implementing access controls, two-factor authentication, and data encryption at rest can further enhance security.

How can I optimize transfer speeds for large files?

To optimize transfer speeds, consider utilizing accelerated transfer technologies like Aspera FASP. Additionally, optimizing network settings, such as TCP window size and congestion control algorithms, can improve transfer performance.
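The reason TCP window size matters can be seen from the bandwidth-delay product: a single TCP connection can have at most one window of unacknowledged data in flight per round trip. The following sketch works through that arithmetic. The function names are illustrative, and the model ignores packet loss and congestion control, which reduce real-world throughput further.

```python
def bandwidth_delay_product_bytes(link_mbps, rtt_ms):
    """Bytes that must be in flight to keep a link of this speed and latency full."""
    bits_in_flight = link_mbps * 1_000_000 * (rtt_ms / 1000)
    return bits_in_flight / 8


def max_throughput_mbps(window_bytes, rtt_ms):
    """Upper bound on single-connection throughput for a given window and RTT."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000
```

On a 1 Gbps link with a 100 ms round-trip time, the product is about 12.5 MB, while a 64 KiB window caps a single connection at roughly 5 Mbps. This gap is why long-distance transfers benefit from larger windows, parallel connections, or UDP-based acceleration.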

What are the benefits of using cloud-based file transfer services?

Cloud-based file transfer services offer scalability, global availability, and robust security features. They provide seamless integration with other cloud-based applications and can handle large volumes of data efficiently.